Businesses are increasingly embracing Generative Artificial Intelligence (GAI) to drive efficiency and achieve results. Unfortunately, many are unaware of the potential risks of deploying AI systems without proper guardrails. Left unchecked, AI can lead to biased decision-making, data leaks, and hallucinations, which can quickly become a liability that damages a company’s reputation and bottom line.

Understanding the Risks of Prompt Overloading

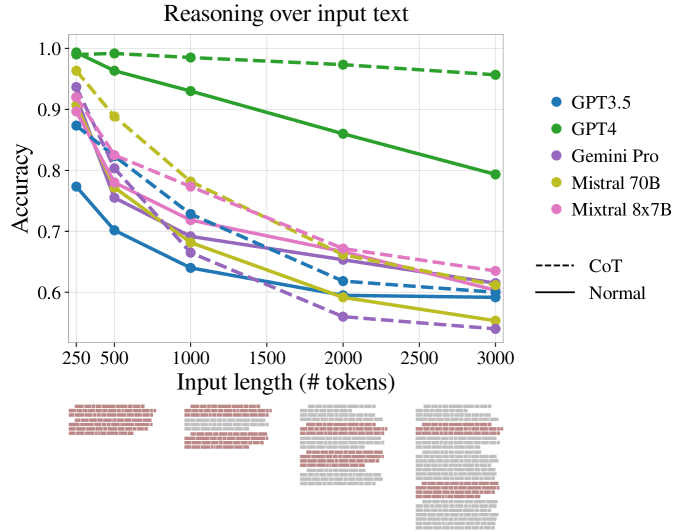

Prompt engineering, a method frequently used to direct AI behavior, entails designing specific inputs, or prompts, to steer AI outputs. While effective in some instances, recent research titled “Same Task, More Tokens: The Impact of Input Length on the Reasoning Performance of Large Language Models” explores the limitations of relying solely on elaborate prompts. The study reveals that the accuracy of Large Language Models (LLMs) decreases as the input length increases. Consequently, simply adding more guidelines to the prompt does not guarantee consistent reliability in generating dependable generative AI applications. This phenomenon, known as prompt overloading, highlights the inherent risks of overly complex prompt designs.

Aporia’s CEO and co-founder, Liran Hason, believes it falls short in safeguarding AI applications against more complex threats like hallucinations and prompt injections. “Prompt engineering is a time-consuming process that often leads to incomplete, high-maintenance policies,” explains Hason. “It simply doesn’t provide the comprehensive protection needed to ensure AI safety.”

The Drawbacks of Prompt Engineering When Trying to Safeguard GenAI Apps

Prompt engineering requires constant updates and fine-tuning to handle the ever-evolving nature of AI-generated content. This method can be extremely time-consuming and often results in policies that are difficult to manage and maintain. Additionally, it struggles to effectively prevent AI from generating harmful or nonsensical outputs, such as hallucinations, where the AI produces content based on misinformation or entirely fabricated data. Moreover, prompt injections, where malicious users craft inputs to manipulate the AI’s responses, remain a significant challenge.

The Benefit of Guardrails

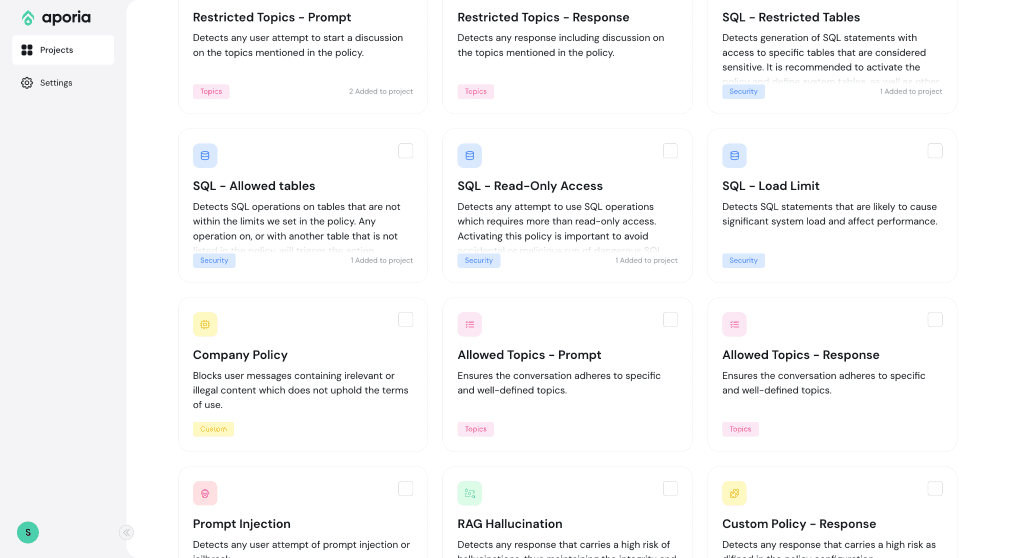

Aporia’s CEO advocates for a different approach: implementing guardrails that stand between the LLM and the user. Unlike prompt engineering, guardrails provide a robust, real-time solution that ensures AI systems operate within predefined boundaries. These guardrails continuously monitor AI outputs, preventing unwanted behavior and mitigating risks such as data leaks and biased decision-making.

“Aporia Guardrails offer a low-maintenance, high-efficiency solution with sub-second latency,” says Alon Gubkin, Aporia’s Co-Founder and Chief Technology Officer (CTO). “They provide clear, defined policies that adapt to the unique needs of each business, ensuring AI interactions consistently align with the company’s values and objectives.”

Tailored AI Safety for Unique Needs

One of Aporia’s key strengths is its flexibility. The platform allows businesses to define and enforce AI policies and guidelines tailored to their specific industry, use case, and risk tolerance. This ensures that AI interactions consistently align with the company’s values and brand identity.

“Every organization has unique requirements for AI safety,” says Hason. “Aporia’s customizable policies enable businesses to strike the right balance between innovation and risk management, confident that their AI deployments are effective and responsible.”

Aporia Labs: Staying Ahead of AI Threats

To stay ahead of the evolving AI threat landscape, Aporia has established Aporia Labs, a dedicated research and development division staffed by AI and cybersecurity experts. Aporia Labs proactively identifies emerging vulnerabilities and develops cutting-edge solutions to keep their customers one step ahead.

“Contrary to what many companies believe, AI threats are not static; they are constantly evolving as the technology advances,” explains Hason. “Our team at Aporia Labs takes a proactive stance, identifying emerging vulnerabilities and developing solutions to keep our customers one step ahead.”

The Cost of Inaction

Neglecting AI reliability can lead to severe consequences, including financial losses and reputational damage. Companies that prioritize AI safety and responsible deployment will have a significant competitive advantage. “They will be better positioned to build trust with stakeholders and better prepared for the inevitable rise of AI governance regulations,” asserts Hason.